An order-of-magnitude leap for accelerated computing.

The NVIDIA H100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and high-performance computing (HPC) to tackle the world’s toughest computing challenges. With the NVIDIA® NVLink® Switch System, up to 256 H100s can be connected to accelerate exascale workloads, and a dedicated Transformer Engine handles trillion-parameter language models. Combined, H100’s technology innovations can speed up large language models by up to 30X over the previous generation to deliver industry-leading conversational AI.
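To put the 256-GPU and trillion-parameter figures side by side, here is a rough sizing sketch. It uses the 80 GB per-GPU memory from the specifications on this page and assumes 2 bytes per parameter (16-bit weights). That assumption is not stated in the source, and the sketch ignores optimizer state, activations, and any other runtime memory.

```python
import math

# Figures taken from this page.
MAX_GPUS = 256                   # H100s connectable via NVLink Switch System
MEM_PER_GPU_GB = 80              # GPU memory per H100
PARAMS = 1_000_000_000_000       # a "trillion-parameter" model

# Assumption (not from the source): weights stored in a 16-bit format.
BYTES_PER_PARAM = 2


def aggregate_memory_gb(gpus: int, mem_per_gpu_gb: int) -> int:
    """Total GPU memory across all connected GPUs, in GB."""
    return gpus * mem_per_gpu_gb


def weights_size_gb(params: int, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


total_gb = aggregate_memory_gb(MAX_GPUS, MEM_PER_GPU_GB)
weights_gb = weights_size_gb(PARAMS, BYTES_PER_PARAM)
min_gpus_for_weights = math.ceil(weights_gb / MEM_PER_GPU_GB)

print(f"Aggregate memory across {MAX_GPUS} GPUs: {total_gb} GB")
print(f"Weights only, at {BYTES_PER_PARAM} bytes/param: {weights_gb:.0f} GB")
print(f"GPUs needed just to hold the weights: {min_gpus_for_weights}")
```

Under these assumptions the weights alone span many GPUs, which is why the interconnect between them matters as much as the per-GPU figures.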


Technical Data

| Specification | Value |
| --- | --- |
| FP64 | 26 TFLOPS |
| FP64 Tensor Core | 51 TFLOPS |
| FP32 | 51 TFLOPS |
| TF32 Tensor Core | 756 TFLOPS |
| BFLOAT16 Tensor Core | 1,513 TFLOPS |
| FP16 Tensor Core | 1,513 TFLOPS |
| INT8 Tensor Core | 3,026 TOPS |
| GPU memory | 80 GB |
| GPU memory bandwidth | 2 TB/s |
| Max thermal design power (TDP) | 300 to 350 W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10 GB each |

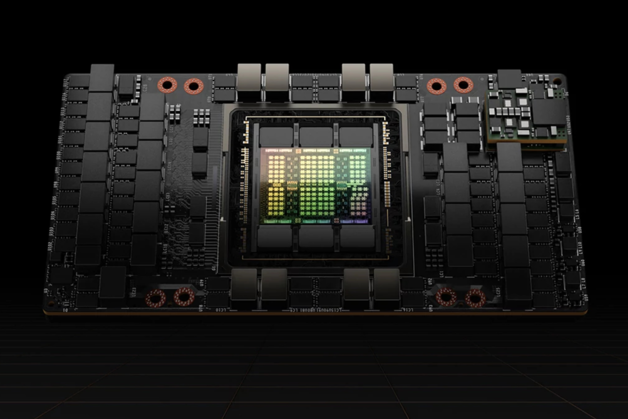

NVIDIA H100 PCIe

| Specification | Value |
| --- | --- |
| Form factor | PCIe dual-slot, air-cooled |
| Interconnect | NVLink: 600 GB/s; PCIe Gen5: 128 GB/s |
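As a rough illustration of what the two interconnect figures mean in practice, the sketch below estimates how long it would take to move one GPU's full 80 GB of memory over each link. It treats the quoted bandwidths as sustained, one-directional transfer rates. That is an assumption: the figures above are peak values and real transfers will be slower.

```python
# Figures from the H100 PCIe specifications above.
GPU_MEMORY_GB = 80
LINK_BANDWIDTH_GB_PER_S = {
    "NVLink": 600,
    "PCIe Gen5": 128,
}


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal transfer time, assuming the link sustains its quoted bandwidth."""
    return size_gb / bandwidth_gb_per_s


transfer_times = {
    link: transfer_seconds(GPU_MEMORY_GB, bandwidth)
    for link, bandwidth in LINK_BANDWIDTH_GB_PER_S.items()
}

for link, seconds in transfer_times.items():
    print(f"{link}: {seconds * 1000:.0f} ms to move {GPU_MEMORY_GB} GB")
```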

Technical Data

| Specification | Value |
| --- | --- |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS |
| INT8 Tensor Core | 3,958 TOPS |
| GPU memory | 80 GB |
| GPU memory bandwidth | 3.35 TB/s |
| Max thermal design power (TDP) | Up to 700 W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10 GB each |

NVIDIA H100 SXM

| Specification | Value |
| --- | --- |
| Form factor | SXM |
| Interconnect | NVLink: 900 GB/s; PCIe Gen5: 128 GB/s |
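To compare the two form factors at a glance, the sketch below computes SXM-to-PCIe ratios for a handful of the figures listed above. The choice of rows is illustrative, and the PCIe TDP uses the upper end of its 300 to 350 W range. These are ratios of peak datasheet values and should not be read as measured application speedups.

```python
# Selected figures copied from the two specification tables above.
H100_PCIE = {
    "FP64 (TFLOPS)": 26,
    "TF32 Tensor Core (TFLOPS)": 756,
    "GPU memory bandwidth (TB/s)": 2.0,
    "NVLink (GB/s)": 600,
    "Max TDP (W)": 350,  # upper end of the configurable 300 to 350 W range
}
H100_SXM = {
    "FP64 (TFLOPS)": 34,
    "TF32 Tensor Core (TFLOPS)": 989,
    "GPU memory bandwidth (TB/s)": 3.35,
    "NVLink (GB/s)": 900,
    "Max TDP (W)": 700,
}


def spec_ratios(baseline: dict, other: dict) -> dict:
    """Return other/baseline for every specification present in both."""
    return {name: other[name] / baseline[name] for name in baseline if name in other}


sxm_vs_pcie = spec_ratios(H100_PCIE, H100_SXM)

for name, ratio in sxm_vs_pcie.items():
    print(f"{name}: SXM is {ratio:.2f}x PCIe")
```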