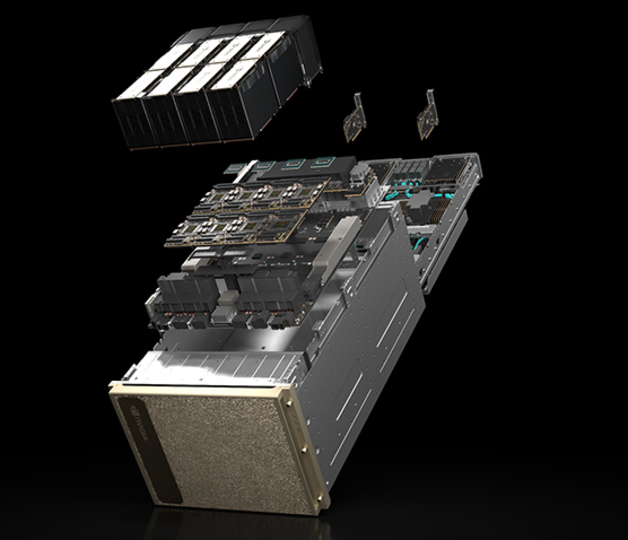

NVIDIA DGX H100 Datasheet

Explore DGX H100

- 8 NVIDIA H100 GPUs with 640 GB of total GPU memory

- 18 NVIDIA® NVLink® connections per GPU, 900 GB/s of bidirectional GPU-to-GPU bandwidth

- 4 NVIDIA NVSwitches™

- 7.2 TB/s of bidirectional GPU-to-GPU bandwidth, 1.5x more than the previous generation

- 10 NVIDIA ConnectX®-7 400 Gb/s network interfaces

- 1 TB/s of peak bidirectional network bandwidth

- Dual Intel Xeon Platinum 8480C processors, 112 cores total, and 2 TB System Memory

- Powerful CPUs for the most intensive AI jobs

- 30 TB NVMe SSD

- High-speed storage for maximum performance
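
The headline totals in the list above follow from the per-unit figures by simple arithmetic. The sketch below checks that the numbers are mutually consistent. It rests on assumptions that the datasheet does not state directly: memory and NVLink bandwidth are split evenly across GPUs and links, each ConnectX-7 interface runs at 400 gigabits (not gigabytes) per second in each direction, and 1 TB = 1000 GB. The function and variable names are illustrative only.

```python
# Consistency check for the DGX H100 headline figures.
# All per-unit values are derived from the listed totals under the
# assumptions named above; they are not quoted from the datasheet.

NUM_GPUS = 8
TOTAL_GPU_MEMORY_GB = 640
NVLINKS_PER_GPU = 18
NVLINK_BW_PER_GPU_GB_S = 900   # GB/s, bidirectional, per GPU
NUM_NICS = 10
NIC_RATE_GBIT_S = 400          # Gb/s per direction (assumed gigabits)


def memory_per_gpu_gb() -> float:
    """Memory per GPU, assuming an even split of the total."""
    return TOTAL_GPU_MEMORY_GB / NUM_GPUS


def bandwidth_per_nvlink_gb_s() -> float:
    """Bidirectional bandwidth per NVLink, assuming an even split."""
    return NVLINK_BW_PER_GPU_GB_S / NVLINKS_PER_GPU


def aggregate_nvlink_tb_s() -> float:
    """Sum of per-GPU NVLink bandwidth across all GPUs, in TB/s."""
    return NUM_GPUS * NVLINK_BW_PER_GPU_GB_S / 1000


def peak_network_tb_s() -> float:
    """Peak bidirectional network bandwidth in TB/s.

    Gb/s -> GB/s divides by 8; bidirectional doubles the figure.
    """
    return NUM_NICS * NIC_RATE_GBIT_S * 2 / 8 / 1000


if __name__ == "__main__":
    print(f"Memory per GPU:        {memory_per_gpu_gb():.0f} GB")
    print(f"Bandwidth per NVLink:  {bandwidth_per_nvlink_gb_s():.0f} GB/s")
    print(f"Aggregate NVLink:      {aggregate_nvlink_tb_s():.1f} TB/s")
    print(f"Peak network (bidir.): {peak_network_tb_s():.1f} TB/s")
```

Under these assumptions the results match the list: 80 GB per GPU, 50 GB/s per NVLink, 7.2 TB/s aggregate GPU-to-GPU bandwidth, and 1 TB/s of peak bidirectional network bandwidth. The last figure only works out if the interface rate is read as gigabits per second.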