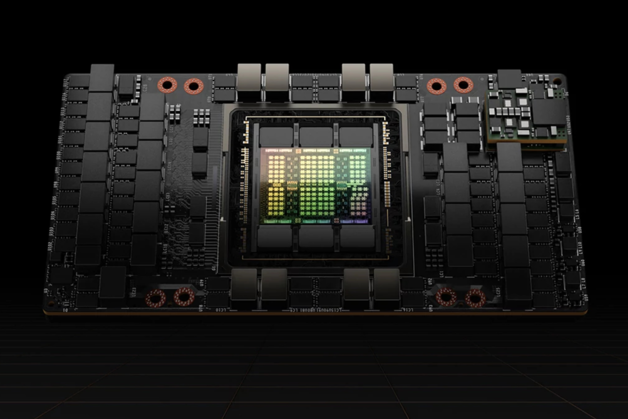

The GPU for Generative AI and HPC

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.

With the latest Blackwell B200 GPU, NVIDIA offers even greater energy efficiency, bandwidth, and computing power.


NVIDIA H200 NVL: Technical Data

| Specification | NVIDIA H200 NVL |
| --- | --- |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS |
| INT8 Tensor Core | 3,958 TOPS |
| GPU memory | 141 GB |
| GPU memory bandwidth | 4.8 TB/s |
| Max thermal design power (TDP) | Up to 600 W (configurable) |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 16.5 GB each |
| Form factor | PCIe |
| Interconnect | 2- or 4-way NVIDIA NVLink bridge: 900 GB/s; PCIe Gen5: 128 GB/s |
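As a rough illustration of what the 141 GB capacity and the 16.5 GB MIG instances mean for model sizing, the sketch below estimates the memory taken by a model's weights alone. It assumes 1 GB = 10^9 bytes and counts only weights (no KV cache, activations, or framework overhead). The helper names `weights_gb` and `fits` and the 7B/70B parameter counts are hypothetical examples, not taken from this page.

```python
# Back-of-envelope weight-memory estimate (illustrative sketch, not an NVIDIA tool).
# Assumptions: 1 GB = 1e9 bytes; only model weights are counted.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}

H200_MEMORY_GB = 141.0   # "GPU memory" row
MIG_SLICE_GB = 16.5      # per-instance memory from the MIG row
MAX_MIG_INSTANCES = 7    # "Up to 7 MIGs"


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, capacity_gb: float) -> bool:
    """True if the weights alone fit in capacity_gb (ignores KV cache etc.)."""
    return weights_gb(num_params, dtype) <= capacity_gb


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params, dtype in [(7e9, "bf16"), (70e9, "bf16"), (70e9, "int8")]:
        need = weights_gb(params, dtype)
        print(f"{params / 1e9:.0f}B params in {dtype}: {need:.1f} GB | "
              f"one MIG instance: {fits(params, dtype, MIG_SLICE_GB)} | "
              f"full GPU: {fits(params, dtype, H200_MEMORY_GB)}")
    total = MAX_MIG_INSTANCES * MIG_SLICE_GB
    print(f"{MAX_MIG_INSTANCES} MIG instances cover {total} GB of {H200_MEMORY_GB} GB")
```

Under these assumptions, a 70B-parameter model in BF16 needs about 140 GB for its weights, which leaves almost no headroom for the KV cache on a 141 GB GPU. Seven 16.5 GB instances add up to 115.5 GB, less than the full 141 GB.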

NVIDIA H200 SXM: Technical Data

| Specification | NVIDIA H200 SXM |
| --- | --- |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS |
| INT8 Tensor Core | 3,958 TOPS |
| GPU memory | 141 GB |
| GPU memory bandwidth | 4.8 TB/s |
| Max thermal design power (TDP) | Up to 700 W (configurable) |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 16.5 GB each |
| Form factor | SXM |
| Interconnect | NVIDIA NVLink: 900 GB/s; PCIe Gen5: 128 GB/s |
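The 4.8 TB/s memory bandwidth listed for the H200 can be turned into a simple lower bound: any operation that must read every byte of a given data set once cannot finish faster than its size divided by bandwidth. The sketch below assumes decimal units (1 TB/s = 10^12 bytes/s) and the peak figure from the table; sustained bandwidth in real kernels is lower, so actual times are longer. The function name `min_sweep_time_ms` and the 140 GB weight-streaming example are hypothetical.

```python
# Lower bound on the time to read a given amount of data at peak memory bandwidth.
# Assumptions: decimal units, peak (not sustained) bandwidth, no caching or overlap.

H200_MEMORY_GB = 141.0      # "GPU memory" row
H200_BANDWIDTH_TBPS = 4.8   # "GPU memory bandwidth" row


def min_sweep_time_ms(data_gb: float, bandwidth_tbps: float) -> float:
    """Best-case milliseconds to stream data_gb once at bandwidth_tbps."""
    bytes_to_read = data_gb * 1e9
    bytes_per_second = bandwidth_tbps * 1e12
    return bytes_to_read / bytes_per_second * 1e3


if __name__ == "__main__":
    full = min_sweep_time_ms(H200_MEMORY_GB, H200_BANDWIDTH_TBPS)
    print(f"Reading all {H200_MEMORY_GB:.0f} GB once takes at least {full:.1f} ms")
    # Hypothetical example: a memory-bound step that must read ~140 GB of weights
    # can run at most this many times per second at peak bandwidth.
    step = min_sweep_time_ms(140.0, H200_BANDWIDTH_TBPS)
    print(f"At most about {1000 / step:.0f} full weight passes per second")
```

At peak bandwidth, one pass over the full 141 GB takes at least about 29.4 ms, which is why memory bandwidth, not just compute, bounds memory-bound workloads such as LLM token generation.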